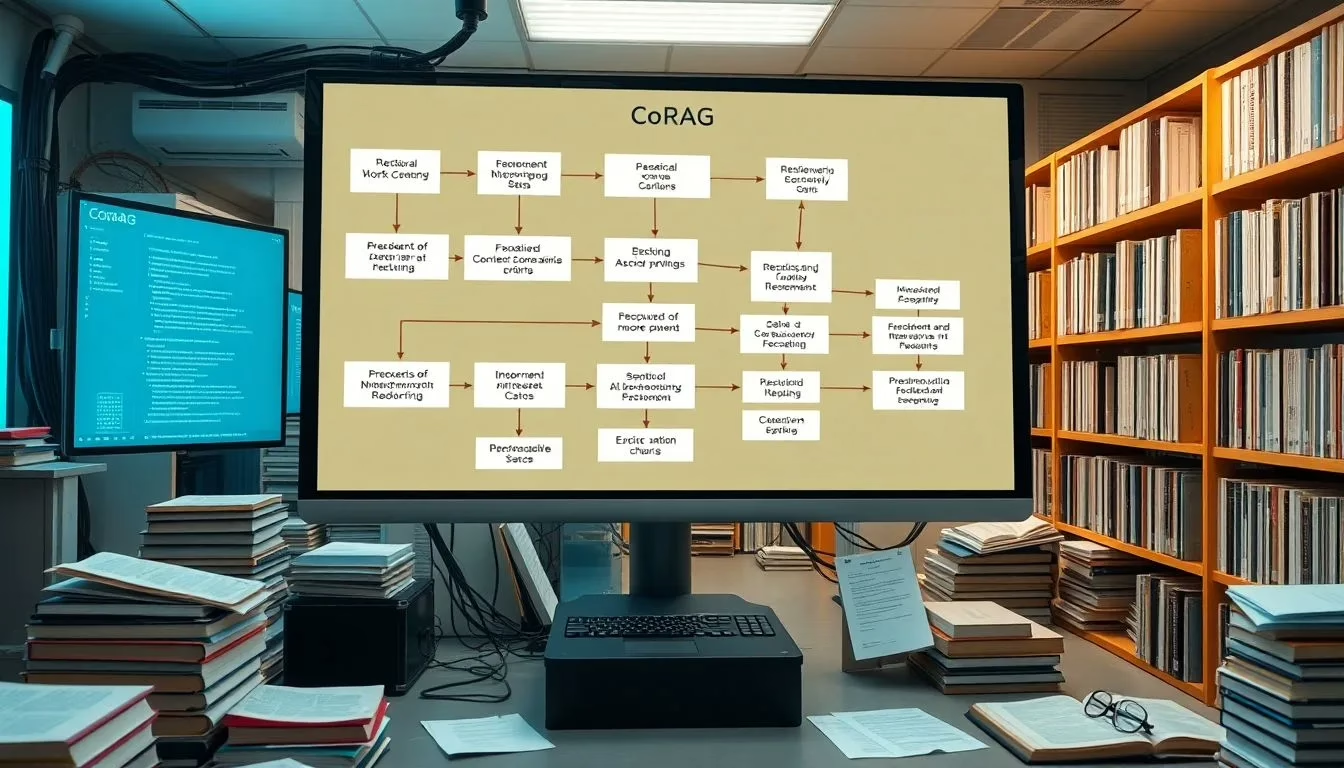

Chain-of-Retrieval Augmented Generation (CoRAG) is a technique for question answering in which the model retrieves and reasons over relevant information step by step. Unlike conventional methods, CoRAG is trained on intermediate retrieval chains generated through rejection sampling, which improves accuracy on complex queries. The model can adaptively reformulate its query as the reasoning state evolves, and it significantly outperforms strong baselines on multi-hop question answering tasks. CoRAG also establishes new state-of-the-art performance on the KILT benchmark, making it a promising approach for developing factual and grounded foundation models.

Chain-of-Retrieval Augmented Generation (CoRAG): The Future of AI Question Answering

Chain-of-Retrieval Augmented Generation (CoRAG) is a recent approach to answering complex questions with AI systems. It dynamically retrieves and reasons over relevant information step by step, which significantly improves accuracy on question answering tasks.

How CoRAG Works

Conventional retrieval-augmented models often struggle with complex queries because their retrieval results are imperfect. CoRAG addresses this limitation with a framework in which the model iteratively retrieves and reasons over relevant information. Training data for this behavior comes from rejection sampling, which automatically generates intermediate retrieval chains. Each chain consists of a sequence of sub-queries and their corresponding sub-answers, so the model learns to reformulate its next query based on the evolving state of the reasoning process.
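The loop described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the authors' implementation: the names `RetrievalChain`, `build_chain`, `propose_sub_query`, `retrieve`, and `answer_sub_query` are hypothetical, the empty-string stop signal is an assumption, and the LLM and retriever are replaced by toy stand-ins.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class RetrievalChain:
    """A question plus the (sub-query, sub-answer) steps gathered so far."""
    question: str
    steps: List[Tuple[str, str]] = field(default_factory=list)


def build_chain(
    question: str,
    propose_sub_query: Callable[[RetrievalChain], str],
    retrieve: Callable[[str], List[str]],
    answer_sub_query: Callable[[str, List[str]], str],
    max_steps: int = 4,
) -> RetrievalChain:
    """Extend the chain step by step; each sub-query sees all earlier steps."""
    chain = RetrievalChain(question)
    for _ in range(max_steps):
        sub_query = propose_sub_query(chain)  # reformulated from the current state
        if not sub_query:                     # assumed stop signal: empty string
            break
        documents = retrieve(sub_query)
        sub_answer = answer_sub_query(sub_query, documents)
        chain.steps.append((sub_query, sub_answer))
    return chain


# Toy stand-ins for the LLM and the retriever (illustrative only).
CORPUS = {
    "Who directed Inception?": ["Inception was directed by Christopher Nolan."],
    "Where was Christopher Nolan born?": ["Christopher Nolan was born in London."],
}


def toy_propose(chain: RetrievalChain) -> str:
    if not chain.steps:
        return "Who directed Inception?"
    if len(chain.steps) == 1:
        # The second sub-query depends on the first sub-answer.
        return f"Where was {chain.steps[0][1]} born?"
    return ""


def toy_retrieve(sub_query: str) -> List[str]:
    return CORPUS.get(sub_query, [])


def toy_answer(sub_query: str, documents: List[str]) -> str:
    if not documents:
        return "No relevant information found"
    # Crude extraction: take the text after " by " or " in ".
    return documents[0].rstrip(".").split(" by ")[-1].split(" in ")[-1]


chain = build_chain(
    "Where was the director of Inception born?",
    toy_propose, toy_retrieve, toy_answer,
)
for sub_query, sub_answer in chain.steps:
    print(sub_query, "->", sub_answer)
```

The point of the sketch is the data flow: the second sub-query cannot be written until the first sub-answer is known, which is what distinguishes a retrieval chain from a single retrieval step.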

Training and Testing

To train CoRAG, researchers use rejection sampling to augment existing datasets that provide only the correct final answer. The retrieval chains are generated by an open-source large language model (LLM), and the model is then fine-tuned on the augmented dataset using the standard next-token prediction objective. At test time, various decoding strategies control the length and number of sampled retrieval chains, which determines how much test-time compute is spent.
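A minimal sketch of the augmentation idea follows. It assumes one simple acceptance rule, namely keeping a sampled chain only when its final answer matches the dataset's answer after normalization; the article does not specify the acceptance criterion, so treat that rule, along with the names `rejection_sample_chain`, `sample_chain`, and `normalize`, as hypothetical. The sampler is a toy stand-in for an LLM.

```python
import random
from typing import Callable, List, Optional, Tuple

Chain = List[Tuple[str, str]]  # (sub-query, sub-answer) pairs


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace before comparing answers."""
    return " ".join(text.lower().split())


def rejection_sample_chain(
    question: str,
    gold_answer: str,
    sample_chain: Callable[[str, random.Random], Tuple[Chain, str]],
    num_samples: int = 8,
    seed: int = 0,
) -> Optional[Chain]:
    """Return the first sampled chain whose final answer matches the gold answer.

    Assumption: exact match after normalization is the acceptance test.
    """
    rng = random.Random(seed)
    for _ in range(num_samples):
        chain, predicted = sample_chain(question, rng)
        if normalize(predicted) == normalize(gold_answer):
            return chain  # accepted: becomes extra supervision for this example
    return None           # every sample was rejected; the example stays unaugmented


def toy_sampler(question: str, rng: random.Random) -> Tuple[Chain, str]:
    """Hypothetical LLM stand-in that sometimes follows a wrong path."""
    if rng.random() < 0.5:
        return [("Who directed Inception?", "Unknown director")], "Unknown city"
    return (
        [("Who directed Inception?", "Christopher Nolan"),
         ("Where was Christopher Nolan born?", "London")],
        "London",
    )


def always_wrong(question: str, rng: random.Random) -> Tuple[Chain, str]:
    return [("Who directed Inception?", "Unknown director")], "Unknown city"


accepted = rejection_sample_chain(
    "Where was the director of Inception born?", "london", toy_sampler
)
rejected = rejection_sample_chain(
    "Where was the director of Inception born?", "london", always_wrong
)
```

Accepted chains would then be serialized together with the question and final answer and used as fine-tuning text; rejected samples are simply discarded.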

Decoding Strategies

CoRAG offers several decoding strategies to balance model performance and test-time compute. These include:

Greedy Decoding: This strategy generates sub-queries and their corresponding sub-answers sequentially.

Best-of-N Sampling: This method samples N retrieval chains at a nonzero temperature and selects the best chain to generate the final answer.

Tree Search: This approach implements a breadth-first search variant with retrieval chain rollouts. It expands the current state by sampling several sub-queries and computes the average penalty score of the rollouts from each expanded state.
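The sampling and tree search strategies above can be sketched as two small selection functions. This is a hedged illustration rather than the published algorithm: the function names, the `expand` and `rollout` callables, and especially the penalty (here, the fraction of steps whose sub-answer reports that nothing relevant was found) are assumptions, since the article does not define how the penalty score is computed.

```python
from statistics import mean
from typing import Callable, List, Sequence, Tuple

Chain = List[Tuple[str, str]]  # (sub-query, sub-answer) pairs
NO_INFO = "No relevant information found"


def toy_penalty(chain: Chain) -> float:
    """Assumed penalty: share of steps that found nothing (lower is better)."""
    return sum(answer == NO_INFO for _, answer in chain) / max(len(chain), 1)


def best_of_n(
    sample_chain: Callable[[], Chain],
    penalty: Callable[[Chain], float],
    n: int = 4,
) -> Chain:
    """Sample n chains and keep the one with the lowest penalty."""
    candidates = [sample_chain() for _ in range(n)]
    return min(candidates, key=penalty)


def tree_search_step(
    state: Chain,
    expand: Callable[[Chain], Sequence[Chain]],
    rollout: Callable[[Chain], Chain],
    penalty: Callable[[Chain], float],
    num_rollouts: int = 2,
) -> Chain:
    """Expand a state and keep the child with the lowest average rollout penalty."""
    def average_penalty(child: Chain) -> float:
        return mean([penalty(rollout(child)) for _ in range(num_rollouts)])
    return min(expand(state), key=average_penalty)


# Toy usage with fixed candidates instead of an LLM.
pool = iter([
    [("q1", NO_INFO), ("q2", "a2")],
    [("q1", "a1"), ("q2", "a2")],
    [("q1", NO_INFO), ("q2", NO_INFO)],
])
best = best_of_n(lambda: next(pool), toy_penalty, n=3)

start: Chain = [("q1", "a1")]
children = [start + [("q2a", "a2")], start + [("q2b", NO_INFO)]]
kept = tree_search_step(
    start,
    expand=lambda state: children,
    rollout=lambda child: child + [("q3", "a3")],
    penalty=toy_penalty,
)
```

With `n=1` and a deterministic sampler, `best_of_n` reduces to the greedy case. Raising `n`, the number of expanded sub-queries, or `num_rollouts` spends more test-time compute in exchange for a better chance of finding a low-penalty chain.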

Empirical Evaluation

Empirical evaluation shows that CoRAG substantially outperforms strong baselines on multi-hop question answering tasks. On the KILT benchmark, which covers a diverse range of knowledge-intensive tasks, CoRAG establishes new state-of-the-art performance. The model’s robustness to retrievers of varying quality and its ability to decompose complex queries make it a promising direction for future research on retrieval-augmented generation (RAG).

1. What is Chain-of-Retrieval Augmented Generation (CoRAG)?

Answer: CoRAG is an AI technique that dynamically retrieves and reasons over relevant information step by step to enhance question answering accuracy.

2. How does CoRAG differ from conventional AI models?

Answer: CoRAG uses rejection sampling to generate intermediate retrieval chains, allowing the model to adaptively reformulate queries based on evolving states.

3. What is rejection sampling in the context of CoRAG?

Answer: Rejection sampling is an automated method for generating retrieval chains by sampling an LLM based on the query and preceding sub-queries and sub-answers.

4. How is the model trained in CoRAG?

Answer: The model is fine-tuned on augmented datasets using standard next-token prediction objectives within a multi-task learning framework.

5. What are the decoding strategies employed by CoRAG?

Answer: CoRAG uses greedy decoding, best-of-N sampling, and tree search to control test-time compute and balance it against model performance.

6. What is the significance of the KILT benchmark in evaluating CoRAG?

Answer: KILT is a benchmark covering a diverse range of knowledge-intensive tasks, and CoRAG establishes new state-of-the-art performance on it.

7. How does CoRAG handle complex queries?

Answer: CoRAG dynamically retrieves relevant information and plans subsequent retrieval steps based on the current state, mirroring human problem-solving processes.

8. What are the benefits of using CoRAG in AI question answering?

Answer: CoRAG improves factual accuracy and robustness against retrievers of varying quality, making it a promising solution for developing grounded foundation models.

9. How does CoRAG mitigate hallucination in model-generated content?

Answer: Because CoRAG iteratively retrieves and reasons over relevant information, its responses are grounded in retrieved evidence, which reduces the likelihood of hallucination.

10. What are the potential applications of CoRAG in real-world scenarios?

Answer: CoRAG can be applied in various real-world scenarios where accurate and factual information is crucial, such as in customer service chatbots, medical diagnosis systems, and educational platforms.

Chain-of-Retrieval Augmented Generation (CoRAG) represents a significant advancement in AI question answering by dynamically retrieving and reasoning over relevant information. Its innovative methodology, coupled with rejection sampling and diverse decoding strategies, makes it a robust and accurate solution for complex queries. As AI continues to evolve, CoRAG stands as a promising avenue for future research, poised to enhance the trustworthiness and factual accuracy of model-generated content.
